The User Condition: Computer Agency and Behavior

What are the conditions for a computer user to gain agency, defined here as the ability to evade automatisms? What is the user’s horizon of autonomy within a built world made of software programmed by somebody else, when its logic is made inaccessible in the name of convenience?

“To know the world one must construct it.”

A World of Things

As humans, when we are born we are thrown into a world. This world is shaped by things made by other humans before us. These things are what relate and separate people at the same time. Not only do we contemplate them, we also use these things and fabricate more of them. In this world of things, we labor, work and act. Labor was originally understood as a private process for subsistence that didn’t result in a lasting product. Through work, we fabricate durable things instead. Finally, we act, that is, we do things that lead to new beginnings: we give birth, we engage with politics, we quit our job. Nowadays, work looks a lot like labor: like the fruits of the earth, most of the supposedly durable artifacts we surround ourselves with don’t last long. One could arrange these three activities along a scale of behavior, understood here as a repeated gesture: labor is pure behavior, work can be seen as a modulation of behavior, action is interruption of behavior. Action is what breaks the “fateful automatism of sheer happening”. This is, in a nutshell, Hannah Arendt’s depiction of the human condition.1

Let’s apply this model to computers. If another world, a world within a world, is to be found anywhere, it is inside the computer, since the computer has the ability not only, like other media, to represent things, but to simulate those things, and to simulate other media as well. Joanne McNeil points out that “metaphors get clunky when we talk about the internet” because the internet, a network of networks of computers, is fundamentally manifold and diverse. But for the sake of the argument, let’s employ a metaphor anyway. Individual applications, websites, apps and online platforms are a bit like the things that populate a metropolis: whole neighborhoods, monuments, public squares, shopping malls, factories, offices, private studios, abandoned construction sites, workshops, gardens (both walled and not).2 This analogy emerged clearly at the inception of the web, but it became less evident after the spread of mobile devices. Again McNeil: “As smartphones blurred organizational boundaries of online and offline worlds, spatial metaphors lost favor. How could we talk about the internet as a place when we’re checking it on the go, with mobile hardware offering turn-by-turn directions from a car cup holder or stuffed in a jacket pocket?”.3 Nowadays, the internet might feel less like a world, but it maintains the “worldly” feature of producing the more or less intelligible conditions of users. In fact, with and within networked computers, users perform all three kinds of activity identified by Arendt: they perform repetitive labor, they fabricate things, and, potentially, they act, that is, they produce new beginnings by escaping prescribed paths, by creating new ones, by not doing what they are expected to do or what they’ve always been doing.

Agency and Automatism

Among the three types of activity identified by Hannah Arendt, action is the broadest, and the most vague: is taking a shortcut on the way to the supermarket a break from the “fateful automatism of sheer happening”? Does the freshly released operating system coincide with “a new beginning”? Hard to say. And yet, I find “action”, with its negative, anti-behavioral connotation, to be a more useful concept than the one generally used to positively characterize one’s degree of autonomy: agency. Agency is meant to measure someone’s or something’s “capacity, condition, or state of acting or of exerting power”.4 All good, but how do we measure this if not by assessing the very power of changing direction, of producing a fork in a path? A planet that suddenly escapes its predetermined orbit would appear “agential” to us, or even endowed with intent. An action is basically a choice, and agency measures the capacity to make choices. The absence of choice, on the contrary, is behavior. The addict has little agency because, although the choice to interrupt their toxic behavior exists, it is tremendously difficult to make. In short, I propose to define agency as the capacity for action, which is in turn the ability to interrupt behavior.

Here’s a platform-related example. We can postulate a shortage of user agency on most dominant social media platforms. What limits the agency of a user, namely, their ability to stop using such platforms, is a combination of addictive techniques and societal pressures. It’s hard to block the dopamine-induced automatism of scrolling, and maybe it’s even harder to delete your account when all your friends and colleagues assume you have one. In this case, low agency takes the form of a lock-in. If agency means choice, the choice we can call authentic is not to be on Facebook (or WeChat, if you will).

While this is a pragmatic understanding of agency, we shouldn’t forget that it is also a very reductive one: it doesn’t take into account the clash of agencies at play in any system, both human and non-human ones. This text will thus focus on only one ingredient (the user) of the agential soup at play in computers, to borrow an expression from James Bridle.5

Users and Non-Users

We call “user” the person who operates a computer.6 But is “use” the most fitting category to describe such an activity? Pretty generic, isn’t it? New media theorist Lev Manovich briefly argued that “user” is just a convenient term to indicate someone who can be considered, depending on the specific occasion, a player, a gamer, a musician, etc.7 This terminological variety derives from the fact, originally stated by computer pioneers Alan Kay and Adele Goldberg, that the computer is a metamedium, namely, a medium capable of simulating all other media.8 What else can we say about the user? In The Interface Effect Alexander Galloway points out en passant that one of the main software dichotomies is that of the user versus the programmer, the latter being the one who acts and the former being the one who’s acted upon.9 For Olia Lialina, the user condition is a reminder of the presence of a system programmed by someone else.10 Benjamin Bratton clarifies: “in practice, the User is not a type of creature but a category of agents; it is a position within a system without which it has no role or essential identity […] the User is both an initiator and an outcome.”11 Aymeric Mansoux and Marloes De Valk have some ideas on what this user position looks like: whereas on UNIX-based machines the user generally has a name, a home and a clear set of permissions, on Android and iOS the user is just a blind, nameless, homeless process with a numerical identifier.12
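To make the UNIX side of Mansoux and De Valk’s contrast concrete, here is a minimal Python sketch, assuming a Unix-like system, that asks the account database what it knows about a numeric user ID through the standard `pwd` module. When an account record exists, the number resolves to a login name and a home directory; when it does not, only the number remains. The helper `describe_user` is hypothetical, and the sketch does not model Android, where each installed app is typically run under its own Linux UID.

```python
import os
import pwd


def describe_user(uid: int) -> dict:
    """Return what the system knows about a numeric user ID (hypothetical helper).

    On Unix-like systems the account database maps a UID to a login name
    and a home directory. If no entry exists, only the number is left.
    """
    try:
        entry = pwd.getpwuid(uid)
    except KeyError:
        # No account record: a nameless, homeless numerical identifier.
        return {"uid": uid, "name": None, "home": None}
    return {"uid": uid, "name": entry.pw_name, "home": entry.pw_dir}


if __name__ == "__main__":
    # The current process's user, e.g. a name such as "alice" and a home like "/home/alice".
    print(describe_user(os.getuid()))
```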

Christine Satchell and Paul Dourish recognize that the user is a discursive formation aimed at articulating the relationship between humans and machines. However, they consider it too narrow, as interaction includes not only forms of use, but also forms of non-use, such as withdrawal, disinterest, boycott, resistance, etc.13 With our definition of agency in mind (the ability to interrupt behavior and break automatisms), we might come to a surprising conclusion: within a certain system, the non-user is the one who possesses maximum agency, more than the standard user, the power user, and maybe even more than the hacker. To a certain extent, this shouldn’t disconcert us too much, as the ability to refuse (to veto) often coincides with power. Frequently, the very possibility of breaking a behavior, or of not acquiring it in the first place, betrays a certain privilege. We can think, for instance, of Big Tech CEOs who fill their kids’ schedules with activities to keep them away from social media.

Ants

In her essay, Olia Lialina points out that the user preexisted computers as we understand them today. The user populated the minds of people imagining what computational machines would look like and how they would relate to humans. These people were already consciously dealing with issues of agency, action and behavior. One distinction that maps onto the notions of action and behavior is the one between creative and repetitive thought, the latter being prone to mechanization. This distinction can be traced back to Vannevar Bush.14

In 1960, psychologist and computer scientist J. C. R. Licklider, anticipating one of the cores of Ivan Illich’s critique15, noticed how often automation meant that people would be there to help the machine rather than be helped by it. In fact, automation was, and often still is, semi-automation, thus falling short of its goal. This semi-automated scenario merely produces a “mechanically extended man”. The opposite model is what Licklider called “Man-Computer Symbiosis”, a truly “cooperative interaction between men and electronic computers”. The Mechanically Extended Man is a behaviorist model because decisions, which precede actions, are taken by the machine. Man-Computer Symbiosis is a bit more complicated: agency seems to reside in the evolving feedback loop between user and computer. Man-Computer Symbiosis would “enable men and computers to cooperate in making decisions and controlling complex situations without inflexible dependence on predetermined programs”.16 Behavior, understood here as clerical, routinizable work, would be left to computers, while creative activity, which implies various levels of decision making, would be the domain of both.

Alan Kay’s pioneering work on interfaces was guided by the idea that the computer should be a medium rather than a vehicle, its function not pre-established (like that of the car or the television) but reformulable by the user (as in the case of paper and clay). For Kay, the computer had to be a general-purpose device. He also elaborated a notion of computer literacy which would include the ability to read the content of a medium (the tools and materials generated by others) but also the ability to write in a medium. Writing on the computer medium would include the production not only of materials, but also of tools. For Kay, that is authentic computer literacy: “In print writing, the tools you generate are rhetorical; they demonstrate and convince. In computer writing, the tools you generate are processes; they simulate and decide.”17

More recently, Shan Carter and Michael Nielsen introduced the concept of “artificial intelligence augmentation”, namely, the use of AI systems to augment intelligence. Instead of limiting the use of AI to cognitive outsourcing, it would become a tool for cognitive transformation. In the former case, AI acts “as an oracle, able to solve some large class of problems with better-than-human performance”; in the latter it changes “the operations and representations we use to think”.18

Through the decades, user agency meant freedom from predetermined behavior, the ability to program the machine instead of being programmed by it, decision making, cooperation, a break from repetition, functional autonomy. These values, and the concerns deriving from their limitation, had been present since the inception of the science that propelled the development of computers. One of the most pressing fears of Norbert Wiener, the founding father of cybernetics, was fascism. With this word he didn’t refer to the charismatic type of power in place during historical dictatorships. He meant something more subtle and encompassing. For Wiener, fascism meant “the inhuman use of human beings”, a predetermined world, a world without choice, a world without agency.19 Here’s how he describes it in 1950:

In the ant community, each worker performs its proper functions. There may be a separate caste of soldiers. Certain highly specialized individuals perform the functions of king and queen. If man were to adopt this community as a pattern, he would live in a fascist state, in which ideally each individual is conditioned from birth for his proper occupation: in which rulers are perpetually rulers, soldiers perpetually soldiers, the peasant is never more than a peasant, and the worker is doomed to be a worker.

Basically, the fascism so dreaded by Wiener was feudalism. The image he depicts is that of a world without choice, where use (the performance of one’s predetermined function) becomes pure labor, and non-use becomes impossible. Fascism manifests as the prevention of non-use.

Impersonal Computing

In the 1980s, Apple came up with a cheery, Coca-Cola-like ad showing people of all ages from all around the world using its machine for the most diverse purposes. The commercial ended with a promising slogan: “the most personal computer”. A few decades later, Alan Kay, who was among the first to envision computers as personal devices, was not impressed with the state of computers in general, and with those of Apple in particular.20

As I said, for Kay a truly personal computer would encourage full read-write literacy. Through the decades, however, Apple seemed to go in a different direction: cultivating an allure around computers as lifestyle accessories, like a pair of sneakers. In a sense, it fulfilled the consumers’ urge to express their identity more than other companies did. Let’s not, though, look down on the accessory value of a device and the sense of belonging it creates. A visit to any hackerspace is enough to recognize a similar logic at play, but with a Lenovo (or more recently a Dell) in place of a Mac.

And yet, Apple’s computer-as-accessory actively reduced read-write literacy. Apple placed creativity and “genius” at the surface of pre-configured software. Using Kay’s terminology, Apple’s creativity was relegated to the production of materials: a song composed in GarageBand, a funny effect applied to a selfie with Photo Booth. What kind of computer literacy is this? Counterintuitively, what is a form of writing within a software vehicle is often a form of reading the computer medium. We only write the computer medium when we generate not simply materials, but tools. Don’t get me wrong, not all medium writing needs to happen on an old-style terminal, without the aid of a graphical interface. Writing the computer medium is also designing a macro in Excel or assembling an animation in Scratch.

Then the new millennium came, and mobile devices with it. At this point, the gap between reading and writing grew dramatically. In 2007 the iPhone was released. In 2010, the iPad was launched. Its main features had to do not just with not writing the computer medium, but with not writing at all: among them, browsing the web, watching videos, listening to music, playing games, reading ebooks. The hard keyboard, the “way to escape pre-programmed paths” according to Dragan Espenschied21, disappeared from smartphones.22 To escape pre-programmed paths, devices had to be jailbroken. Software was compartmentalized into apps. Screens became small and interfaces lost their complexity to fit them. A “rule of thumb” was established. Paraphrasing Kay, simple things didn’t stay simple, and complex things became less possible.

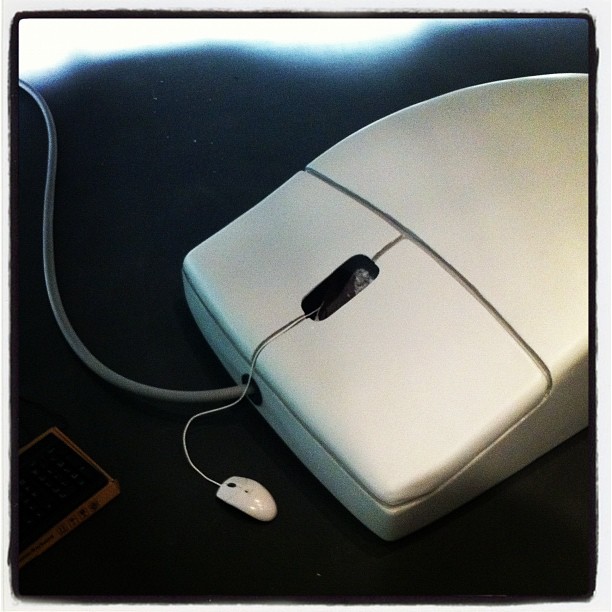

Google Images confirms that we are still anchored to a pre-mobile idea of computers, a sort of skeuomorphism of the imagination. We think desktop or, at most, laptop. Instead, we should think of smartphones. In 2013, Michael J. Saylor stated: “currently people ask, ‘Why do I need a tablet computer or an app-phone [that’s how he calls a smartphone] to access the Internet if I already own a much more powerful laptop computer?’. Before long the question will be, ‘Why do I need a laptop computer if I have a mobile computer that I use in every aspect of my daily life?’”23 If we are to believe CNBC, he was right. One of its recent headlines read: “Nearly three quarters of the world will use just their smartphones to access the internet by 2025”.24 Right now, in the US, a person is more likely to own a mobile phone than a desktop computer (81% vs 74%, according to the Pew Research Center), and I suspect that globally the gap is even wider.25 A person’s first encounter with a computer will soon be with a tablet or mobile phone rather than with a PC. And it’s not just kids: the first computer my aunt ever used is a smartphone. The PC world is turning into a mobile-first world.

There is, finally, another way in which the personal in personal computers has mutated. The personal became personalized. In the past, the personal involved not just the possession of a device, but also one’s own know-how, a savoir faire that a user developed for themselves. A basic example: organizing one’s music collection, that rich, intricate system of directories and filenames each of us individually came up with. Such know-how, big or small, is what allows us to build, or more frequently rebuild, our little shelter within a computer. Our home. When the personal becomes personalized, the knowledge of the user’s preferences and behavior is first registered by the system, and then made alien to the user themselves. There is one music collection and it’s called iTunes, Spotify, YouTube Music. Oh, and it’s also a mall where advertising forms the elevator music. Deprived of their savoir faire, users receive an experience which is tailored to them, but they don’t know exactly how. Why exactly is our social media timeline ordered as it is? We don’t know, but we know it’s based on our prior behavior. Why exactly does the autosuggest function present us with that very word? We assume it’s a combination of factors, but we don’t know which ones.

Let’s call it impersonal computing,26 shall we? Its features: computer accessorization at the expense of an authentic read-write literacy, mobile-first asphyxia, dispossession of an intimate know-how. A know-how that, we must admit, is never fully annihilated: tactical techniques emerge in the cracks, such as small hacks, bugs that become features, eclectic workflows. The everyday life of Lialina’s Turing-Complete User is still rich. That said, we can’t ignore the trend. In an age in which people are urged to “learn to code” for economic survival, computers are commonly used less as a medium than as a vehicle. The utopia of a classless computer world turned out to be exactly that, a utopia. There are users and there are coders.27

A Toaster

An all-inclusive computer literacy for the many was never a simple achievement. Alan Kay and Adele Goldberg recognized this themselves:

The burden of system design and specification is transferred to the user. This approach will only work if we do a very careful and comprehensive job of providing a general medium of communication which will allow ordinary users to casually and easily describe their desires for a specific tool.28

Maybe not many users felt like taking on such a burden. Maybe it was simply too heavy. Or maybe, at a certain moment, the burden started to look heavier than it was. Users’ desires weren’t expressed by them within the computer medium. Instead, they were defined a priori within the controlled setting of interaction design: theoretical user journeys anticipated and construed user activity. In the name of user-friendliness, many learning curves might have been flattened.

Maybe that was users’ true desire all along. Or at least, this is what computer scientist and entrepreneur Paul Graham thinks. In 2001, he recounted: “[…] near my house there is a car with a bumper sticker that reads ‘death before inconvenience.’ Most people, most of the time, will take whatever choice requires least work.” He continues:

When you own a desktop computer, you end up learning a lot more than you wanted to know about what’s happening inside it. But more than half the households in the US own one. My mother has a computer that she uses for email and for keeping accounts. About a year ago she was alarmed to receive a letter from Apple, offering her a discount on a new version of the operating system. There’s something wrong when a sixty-five year old woman who wants to use a computer for email and accounts has to think about installing new operating systems. Ordinary users shouldn’t even know the words “operating system,” much less “device driver” or “patch.”

So who should know these words? “The kind of people who are good at that kind of thing”, says Graham.29 His vision seems antipodal to Kay’s. Given a certain ageism permeating the quote, one is tempted to root for Kay without hesitation, and to frame Graham (who’s currently 56) as someone who wants to prevent the informatic emancipation of his mother. But is that really the case? The answer depends on the cultural status we attribute to computers and the notion of autonomy we adopt.

We might say, with Graham, that his mother is made less autonomous by some technical requirements she neither needs nor wants to deal with. For her, having a computer that functions like a slightly smarter toaster is good enough. Most of the computer’s technical complexity, together with its technical possibilities, is alien to her. It is a waste of time and a source of worry, a burden. Moreover, in order to continue using her machine, she might be forced to familiarize herself with a new operating system.

On the other hand, we might say, keeping in mind Kay’s vision, that the autonomy of Graham’s mother was eroded “upstream”, as she has been using the computer as an impersonal vehicle, oblivious of its profound possibilities. If you believe that society at large loses out by using the computer as a smart toaster, then you’re with Kay. If you think that is fair, then you’re with Graham. But are these two views really in opposition?

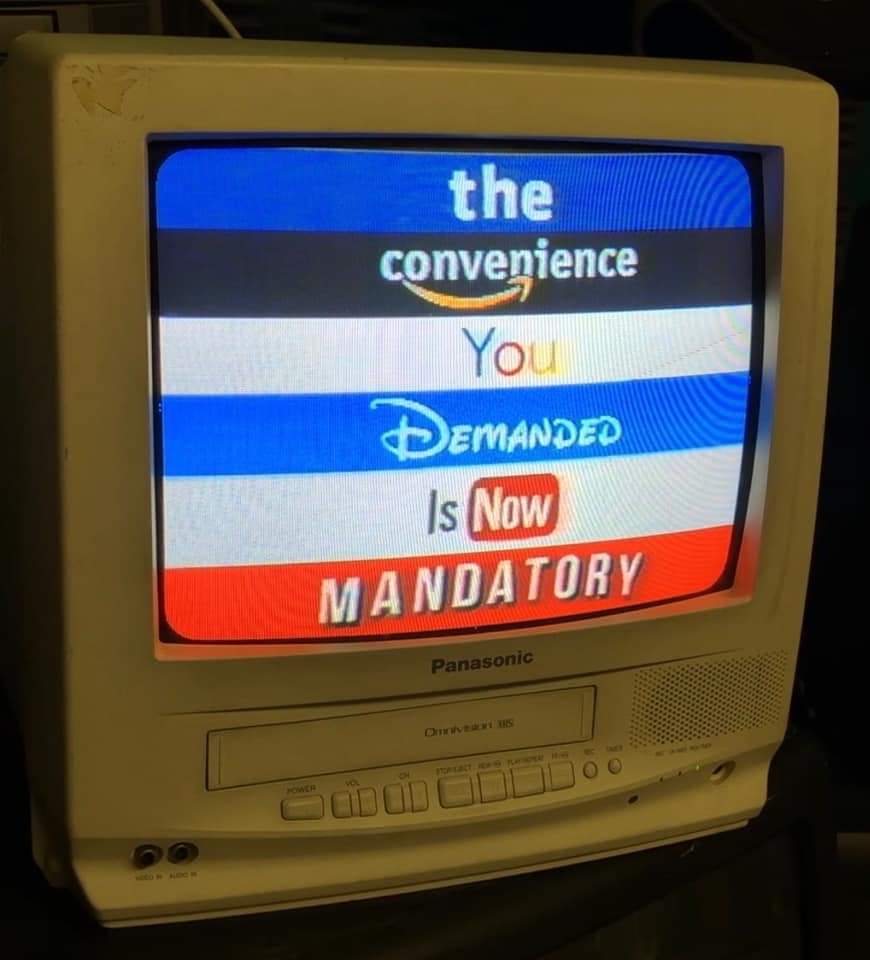

Let’s consider an actual smart toaster, one of those Internet-of-Things devices. Your smart device knows the bread you want to toast and the time that it takes. But, one day, out of the blue, you can’t toast your bread because you haven’t updated the firmware. You couldn’t care less about the firmware: you’re starving. But you learn about it and update the device. Then, the smart toaster doesn’t work as it used to anymore: settings and features have changed. What we witness here is a reduction of agency, as you can’t interrupt the machine’s update behavior. Instead, you have to modify your behavior to adapt to it. Back to Graham’s mother: the know-how she laboriously acquired, the desire for a specific tool that she casually developed through time, might be suddenly wiped out by a change she never asked for. Her vehicle might not have become a full medium, but she inscribed her own way of using it. She built a habit. A habit is an automatism, and as such is of course behavioral. But if “the new beginning” comes from the outside, we don’t have action, just adaptational behavior.

Alan Kay’s motto, once again: “simple things should be simple, complex things should be possible”. Above, we focused on complex things becoming less possible. But what about simple things? Often, they don’t stay simple either. True, without read-write computer literacy a user is stuck in somewhat predetermined patterns of behavior, but the personal adoption of these patterns often forms a know-how. If that is the case, being able to stick to them can be seen as a form of agency. Interruption of behavior means aborting the update.

Misconvenience

The revolution of behavioral patterns is often sold in terms of convenience, namely less work. Less work means fewer decisions to make. Those decisions do not magically disappear; they are simply delegated to an external entity that takes them automatically. In fact, we can define convenience as automated know-how or automated decision-making. We shouldn’t consider this delegation of choice as something intrinsically bad, otherwise we would end up condemning the computer for its main feature: programmability. Instead, we should distinguish between two types of convenience: autonomous convenience (from now on simply convenience) and heteronomous convenience (from now on misconvenience). In the former, the knowledge necessary to take the decision is accessible and modifiable. In the latter, such knowledge is opaque.

Let’s consider two ways of producing a curated feed. The first one involves RSS, a standardized, computer-readable format to gather various content sources. The user manually collects the feeds they want to follow in a list that remains accessible and transformable. The display criterion is generally chronological. Thus, an RSS feed incorporates the user’s knowledge of the sources and automatizes the know-how of going through the blogs individually. Indeed, less work. In this case, it is fair to speak of convenience.
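The convenience just described can be sketched in a few lines of Python, under simplifying assumptions: each subscribed feed is represented by already-parsed entries (source, title, publication date) rather than fetched and parsed from real RSS XML, and the names `Entry` and `merge_chronologically` are hypothetical. The point of the sketch is that both the list of sources (a plain dictionary) and the ordering rule (the publication date) stay in plain view, where the user can read and change them.

```python
from dataclasses import dataclass
from datetime import datetime
from heapq import merge


@dataclass(frozen=True)
class Entry:
    """One already-parsed feed item (hypothetical simplified structure)."""
    source: str
    title: str
    published: datetime


def merge_chronologically(feeds: dict[str, list[Entry]]) -> list[Entry]:
    """Merge per-source entry lists into one newest-first timeline.

    The only ordering rule is the publication date, visible right here.
    """
    # heapq.merge with reverse=True expects each input sorted newest-first.
    per_feed = [sorted(entries, key=lambda e: e.published, reverse=True)
                for entries in feeds.values()]
    return list(merge(*per_feed, key=lambda e: e.published, reverse=True))


if __name__ == "__main__":
    # The subscription list is just data: adding a blog means adding a key.
    subscriptions = {
        "blog-a": [Entry("blog-a", "Post 1", datetime(2021, 3, 1)),
                   Entry("blog-a", "Post 2", datetime(2021, 3, 5))],
        "blog-b": [Entry("blog-b", "Note", datetime(2021, 3, 3))],
    }
    timeline = merge_chronologically(subscriptions)
    print([e.title for e in timeline])  # ['Post 2', 'Note', 'Post 1']
```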

The Twitter feed works differently. The displayed content doesn’t only reflect the list of contacts that the user follows, but also includes ads, replies, etc. The display criterion is “algorhythmic”, that is, based on some factors unknown to the user, and only very partially manipulable by them. This is a case of misconvenience. While convenience is agential, since the user can fully influence its workings, misconvenience is behavioral, because the user can’t.

Broadly speaking, algorhythmic feeds have mostly wiped out the RSS feed savoir faire, overriding autonomous ways of use. The Overton window of complexity was thus reduced. Today, a novel user is thrown into a world where the algorithmic feed is the default, while the old user has to struggle more to maintain their RSS know-how. The expert is burdened with preserving the space to exercise their expertise, while the neophyte is not even aware of the possibility of such expertise.

Blogs stop serving RSS, feed readers go unmaintained, and so on. It is no coincidence that Google discontinued its Reader product, with the following message on its page: “We understand you may not agree with this decision, but we hope you’ll come to love these alternatives as much as you loved Reader.”30 In fact, Google has been simplifying web activities all along. Here is Cory Arcangel in 2009:

After Google simplified the search, each subsequent big breakthrough in net technology was something that decreased the technical know-how required for self-publishing (both globally and to friends). The stressful and confusing process of hosting, ftping, and permissions, has been erased bit by bit, paving the way for what we now call web 2.0.31

True, alternatives do exist, but they are becoming more and more fringe. Graham seems to be right when he says that most users will go for less work. Generally, misconvenience means less work than autonomous convenience, as the maximum number of decisions is taken by the system in place of the user. Furthermore, misconvenience dramatically influences the perception of the work required by autonomous convenience. Nowadays, the process of collecting RSS feed URLs appears tragically tedious compared to Twitter’s seamless “suggestions for you”.

Barack vs Mitch

On Twitter, we can experience the dark undertones of heteronomous convenience. User Tony Arcieri designed a worrisome experiment about the automatic selection of a focal point for image previews, which often show only part of an image when it is tweeted.32 Arcieri uploaded two versions of a long, vertical image. In one, a portrait of Obama was placed at the top and one of Mitch McConnell at the bottom. In the second image the positioning was reversed. In both cases the focal point chosen for the preview was McConnell’s face. Why did that happen? Who knows!33 The system spares the user the time to make such a choice autonomously, but its logic is obscure and immutable. Here, convenience is heteronomous.34

Does it have to be this way? Not necessarily. Mastodon is an open source, self-hosted social network that at first glance looks like Twitter, but it’s profoundly different. One of the many differences (which I’d love to describe in detail but it would be out of the scope of this text, srry) has to do with focal point selection. Here, the user has the option to choose it autonomously, which means manually. They can also avoid making any decision. In that case, the preview will show the middle of the image by default.
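The manual option can be made concrete with a small Python sketch of how a user-chosen focal point might translate into a preview crop. It assumes a coordinate convention similar to the one Mastodon’s API describes for media focal points: x and y each range from -1.0 to 1.0, (0, 0) is the center, and here y = 1.0 is taken to be the top edge. The function `crop_box` is hypothetical and does not reproduce any Mastodon client’s actual cropping code; what it shows is that the rule is explicit, and that with no user choice it falls back to the middle.

```python
def crop_box(width: int, height: int, preview_aspect: float,
             focus: tuple[float, float] = (0.0, 0.0)) -> tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of a preview crop (hypothetical helper).

    focus uses a -1.0..1.0 range on both axes: (0, 0) is the center,
    x = -1.0 the left edge, y = 1.0 the top edge (assumed convention).
    The default focus, used when the user makes no choice, is the center.
    """
    fx, fy = focus
    if not (-1.0 <= fx <= 1.0 and -1.0 <= fy <= 1.0):
        raise ValueError("focus coordinates must lie in [-1.0, 1.0]")
    # Largest box with the preview's aspect ratio (width / height) that fits.
    if width / height > preview_aspect:
        crop_w, crop_h = round(height * preview_aspect), height
    else:
        crop_w, crop_h = width, round(width / preview_aspect)
    # Focal point in pixel coordinates; the y axis is flipped (+1 is the top).
    cx = (fx + 1) / 2 * width
    cy = (1 - fy) / 2 * height
    # Center the box on the focal point, then clamp it inside the image.
    left = min(max(round(cx - crop_w / 2), 0), width - crop_w)
    top = min(max(round(cy - crop_h / 2), 0), height - crop_h)
    return left, top, left + crop_w, top + crop_h


if __name__ == "__main__":
    # A tall 600x2000 image shown in a 2:1 preview, as in Arcieri's experiment.
    print(crop_box(600, 2000, 2.0))              # (0, 850, 600, 1150): the middle
    print(crop_box(600, 2000, 2.0, (0.0, 1.0)))  # (0, 0, 600, 300): the top portrait
```

The user who places one portrait at the top of a long image and another at the bottom decides which one the preview shows; the decision is delegated only if they choose not to make it.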

Click, Scroll, Pause

Misconvenience is an automated know-how, a savoir faire turned into a silent procedure, a set of decisions taken in advance on behalf of the user. Often, this type of convenience goes hand in hand with the removal of friction, that is, laborious decisions that consciously interrupt behavior. Let’s consider a paginated set of items, like the results of a Google Search or DuckDuckGo query. In this context, users have to consciously click on a button to go to the next page of results. That is a minimal form of action, and thus, of friction. Infinite scroll, the interaction technique employed by, for instance, Google Images or Reddit, removes such friction. The mindful action of going through pages is turned into a homogeneous, seamless behavior.
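The difference between the two interaction techniques can be sketched in Python with a toy result set. `fetch_page` is a hypothetical stand-in for a search backend, not the API of Google, DuckDuckGo or any other engine. In the paginated version, each new page exists only because the caller explicitly asks for it; in the infinite-scroll version, a generator requests the next batch by itself whenever the previous one runs out, so consumption never meets a boundary.

```python
from itertools import islice
from typing import Iterator

RESULTS = [f"result {i}" for i in range(1, 51)]  # toy data set: 50 results


def fetch_page(page: int, size: int = 10) -> list[str]:
    """Hypothetical backend call: return one page of results (1-indexed)."""
    start = (page - 1) * size
    return RESULTS[start:start + size]


def infinite_scroll(size: int = 10) -> Iterator[str]:
    """Yield results one by one, silently loading the next page when needed."""
    page = 1
    while batch := fetch_page(page, size):
        yield from batch
        page += 1  # no click: the next request happens on its own


# Pagination: every page is a distinct, deliberate request (a click).
first = fetch_page(1)
second = fetch_page(2)  # happens only if the user chooses to go on

# Infinite scroll: the reader just keeps consuming; page boundaries vanish.
feed = infinite_scroll()
first_25 = list(islice(feed, 25))  # spans three pages without a single decision
```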

And yet, this type of interaction seems somehow old-fashioned. Manually scrolling an infinite webpage feels imperfect, accidental, temporary if not already antiquated, even weird one could say: it’s a mechanical gesture that fits the list’s needs and structure. It’s like turning a crank to listen to a radio. It’s an automatism that hasn’t been automatized yet. This automatism doesn’t produce an event (such as clicking on a link) but modulates a rhythm: it’s analog instead of digital. In fact, it has already been automatized. Think of YouTube playlists, which play automatically, or Instagram stories (a model originated in Snapchat that spread to Facebook and Twitter), where the behavior is reversed: the user doesn’t power the engine, but instead stops it from time to time. In the playlist mode, “active interaction” (a pleonasm only in theory) is an exception.
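The reversal can be sketched in Python as well. The `Player` class below is hypothetical and does not reflect how YouTube, Instagram or Snapchat actually implement playback; it only isolates the logic: the queue advances on every tick by default, and the single input the user can give is an interruption.

```python
from dataclasses import dataclass, field


@dataclass
class Player:
    """Toy autoplaying queue (hypothetical): items advance unless the user intervenes."""
    queue: list[str]
    paused: bool = False
    played: list[str] = field(default_factory=list)

    def pause(self) -> None:
        # The only "active" gesture left to the user: stopping the engine.
        self.paused = True

    def tick(self) -> None:
        """One playback step: if not paused, play the next item in the queue."""
        if not self.paused and self.queue:
            self.played.append(self.queue.pop(0))


player = Player(queue=["clip 1", "clip 2", "clip 3", "clip 4"])
for step in range(4):
    if step == 2:
        player.pause()  # the user interrupts after two clips
    player.tick()       # otherwise playback simply continues
```

Nothing in the loop waits for a click: continuation is the default, and action takes the form of a pause.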

We see here a progression that is analogous to that of the Industrial Revolution: first, some tasks are just unrelated to one another (hyperlinks and pagination, pre-industrial), they are then organized to require manual and mechanical labor (infinite scroll, industrial), finally they are fully automated and only require supervision (stories and playlists, smart factory). Pagination, infinite scroll, playlist. Manual, semi-automated, fully automated. Click, scroll, pause.

| Feature | Platform | Factory |
|---|---|---|
| repetitive, semi-automatic, “mindless” gestures | infinite scroll, swipe | assembly |
| movement without relocation | feed (the user doesn’t leave the page) | conveyor belt (the worker doesn’t leave their position) |
| externalised, opaque, inaccessible knowledge (savoir) | algorithm (arranging data into lists) | industrial know-how (arranging parts into objects) |

The late French philosopher Bernard Stiegler focused on the notion of proletarianization: according to him, a proletarian is robbed not just of the form and the products of their labor, but above all of their know-how.35 Users are deprived of the rich, idiosyncratic fullness of their gestures. These gestures are then reconfigured to fit the system’s logic before being made completely useless. The gesture is first standardized and then automated. The mindless act of scrolling is analogous to the repetitive operation of assembling parts of a product in a factory. Whereas the worker doesn’t leave their position, the user doesn’t leave the page. Both feature movement without relocation. Furthermore, in the factory, machines are organized according to an industrial know-how, so that only the factory as a whole fully understands the functional relationships between parts. What do we call a computational system organized like such a factory? We can call it a platform and define it as a system that extracts and standardizes user decisions before rendering them unintelligible and immutable. In the platform, opaque algorithms embody the logic that arranges data into lists that are then fed to the user. The platform-factory is smart and dynamic; the user-worker is made dumb and static.

Hyperlinearity

Who hasn’t caught themselves daydreaming while scrolling? Stiegler, following art critic Jonathan Crary, maintains that the new proletarianized environments tend to eliminate “those intermittences that are states of sleep and daydreaming”. But, for the time being, it seems that attention, although under siege, is not fully captured, and so intermittence is still possible. A fully behavioral territory, with its anesthetizing repetitiveness, might in fact be the most suitable place for zoning out. While behavior takes place on the screen, content blurs and action unfolds in the mind. This might be the general state of proletarianized interactivity. After all, most people take a break from their cognitive work by scrolling feeds or watching stories.

Proletarianized interactivity is hyperlinear. A typical feature of the assembly line is, as the name suggests, linearity. The informational equivalent of the assembly line is the list. At first, both standalone and networked computers highlighted the possibility of breaking from the linearity of the list, a quality inherited from the printing press. E-literature celebrated this revolution. HyperCard-designed novels emerged, with many paths and no single origin or conclusion. The early web could be understood as a giant collaborative hypertext. What kept everything together was the hyperlink, the building block of nonlinearity. But nonlinearity (or multilinearity) imposes a higher cognitive load, as navigation doesn’t fade into the background: the user is tasked with many decisions, which are enacted by clicking. Then Web 2.0 came, and webpages became more dynamic and more interactive, but also more behavioral. Multilinearity was defeated by an AJAX-fuelled convenientist drive. The outcome was hyperlinearity: the networked linearization of disparate content, sources and activities into list form (personal photos, articles, discussions, polls, adverts, etc.). Sure, a user can still click their way out, but that feels more like sedentary zapping than an active exploration of networked space. From Facebook to Instagram to Reddit and back again: that is hyperlinear zapping, especially evident in the compartmentalized structure of mobile computers.
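Hyperlinearization can be pictured as a merge. Several heterogeneous sources, each already ranked, are interleaved into one list that the user only has to scroll. This is an assumption-laden illustration, not any platform's actual feed algorithm. The `Item` fields and the numeric `score` are hypothetical stand-ins for whatever opaque ranking a platform applies. The sketch uses Python's standard `heapq.merge`, which combines inputs that are each already sorted. Here they are sorted in descending order, hence `reverse=True`.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Item:
    kind: str     # e.g. "photo", "article", "poll", "ad"
    source: str   # where the item comes from
    score: float  # hypothetical ranking score assigned by the platform


def hyperlinearize(*sources: Iterable[Item]) -> List[Item]:
    """Flatten disparate, individually ranked sources into a single list.

    Each source must already be sorted by descending score; heapq.merge
    interleaves them lazily, and list() materializes the resulting feed.
    """
    return list(heapq.merge(*sources, key=lambda item: item.score, reverse=True))


friends = [Item("photo", "friends", 0.9), Item("photo", "friends", 0.4)]
news = [Item("article", "news", 0.8), Item("poll", "news", 0.3)]
ads = [Item("ad", "advertiser", 0.85)]

feed = hyperlinearize(friends, news, ads)
for item in feed:
    print(item.kind, item.source, item.score)
```

Whatever the sources, the output always has the same shape: one column to scroll through. With hyperlinks, the user decided where to go next. Here that choice is absorbed into the ordering.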

Speaking of zapping, in 2012 I created ScrollTV, a plugin that automated scrolling on social media while playing a muzak soundtrack. The project was heavily inspired by “Television Delivers People”, a 1973 video by Richard Serra and Carlota Fay Schoolman in which the passive role of the TV spectator is revealed through the language of broadcast media itself. But many other projects comment more or less directly on this semi-automation of interaction, such as a rubber finger that “swipes right on Tinder so you don’t have to”, or another one, definitely less subtle, that uses a rolling piece of meat instead. In a 2017 project by Stephanie Kneissl and Max Lachner, physical automation is used to trick social media algorithms, but the machines also mirror the semi-automated labor performed by humans on a daily basis. More recently, Ben Grosser created the Endless Doomscroller, made specifically for mobile, which comments on the compulsion to browse bad news on social media. This work reminds me of the older Infinity Contemporaneity Device (2012) by Brendan Howell, which consists of a giant mouse with a rolling wheel that creates an idiosyncratic news experience. Finally, in 2015, two years of the Tumblr blog CLOAQUE were printed as a paper scroll the size of a football field, attached to a crank-activated wooden machine. This latter case foregrounds manual labor, but also the creative opportunities that hyperlinearity can offer: exploiting the homogeneity of the list for an explosion of diverse visual material.

Capitalism vs. Modernization

For Shoshana Zuboff, the feudo-fascist society feared by Norbert Wiener is today a reality. She writes: “Many scholars have taken to describing these new conditions as neofeudalism, marked by the consolidation of elite wealth and power far beyond the control of ordinary people and the mechanisms of democratic consent.”36 According to the US scholar, the culprit is a new breed of capitalism capable of extracting profit from the “behavioral surplus” generated by users, who are unconsciously tracked by digital platforms and smart devices alike.

Surely, the structure of Zuboff’s “surveillance capitalism” foments a logic of extraction from standardized attention traps; however, hyperlinearity and techno-proletarianization might simply be the result of industrial rationalization, or modernization. As the Italian theorist Raffaele Alberto Ventura explains,

The dynamic of modernization consists in the increasing outsourcing of regulatory functions from the social sphere to the techno-administrative sphere: everything that was previously managed through informal, non-codified, traditional norms is progressively transferred to a specific class of “competent” individuals who indicate the most rational options in every field: economics, urban planning, psychology, health, public order, etc.37

We might add that norms and behaviors are transferred not just to a group of people but also to the systems that these people build and make available: interrelated products, services, interfaces. Convenience is another term for this rationalized restructuring. However, a question emerges: whose ratio informs the systems? Some might argue that capitalism and modernization are inseparable,38 and that could be a correct assessment, but distinguishing these two souls helps us foreground one aspect over another: misconvenience over surveillance and extraction.

Speedrun

Misconvenience is about closing things up: limiting gestures, minimizing choices, reducing options. This might appear to be a purely coercive endeavor, but we shouldn’t forget that options, choices and gestures are costly in terms of time. When Alan Kay spoke of the burden of system design and specification being offloaded to the user, he was speaking about time and expertise, and expertise is ultimately also a matter of time.

Each added option generates a new viable path. The stratification of paths is what makes a system look like a world: not only something meaningful that is built, but also something in which one can exercise a degree of free will. Let’s consider videogames. An open-world game like The Legend of Zelda: Breath of the Wild is very much about exploring and enjoying landscapes, taking different directions, getting lost. People spend hundreds of hours on this game (I spent 70 hours). But one can also finish it in less than 30 minutes. On the one hand, a calm, lengthy journey of discovery; on the other, a so-called speedrun.

I’d say that computer use is mostly carried out in speedrun mode. This might have to do with the fact that, for most people, the computer turned from a medium into a tool (or vehicle, to use Kay’s words). Nowadays, people use the computer (and by that I also mean the smartphone) to achieve a specific goal and not to dwell in an environment (the exception being social media). It doesn’t matter if they spend most of their days in front of it: most of them remain at read level, seldom reaching read-write level, which is the level of the medium. By using prepackaged software that doesn’t allow itself to be reprogrammed, we merely interact with content. When a read-write issue emerges, it appears as a bug or a nuisance, a time-sucking problem.

It becomes obvious, then, that in order to nurture a sense of open-worldliness, which in computer terms translates as general-purposeness, one needs time. Any talk of general-purpose computing and true computer literacy should be accompanied by a reflection on available time. Discussing the privacy issues of Google Street View, Joanne McNeil puts it this way: “a person—a user—can hardly rail against technology forever, when it is widely deployed. It isn’t normalization, exactly, but the nature of priorities in a busy life.”39 In fact, busyness is probably what determines most of our technological habits.

Now, a user who invests time in computing shouldn’t necessarily do things by hand instead of automating them. Automation is not misconvenient by default. According to a cliché, a programmer/coder/geek will often spend more time automating a repetitive task than it would take them to do it by hand. They, just like me when I play Zelda, don’t stress out if they deviate from their main mission. And that’s a luxury.

Netstalgia

Nowadays, we long for the good ol’ web, the bulky desktop computer, the screeching 56k modem. We live in a time of netstalgia, and the netstalgic era might in fact have already lasted for a decade. The evidence is abundant: from the success of the confusing notion of brutalist web design,40 to the launch of networks like Neocities (2013) and Tilde.Club (2014), from gardening metaphors applied to websites, to a New Age-ish creed worshipping “HTML energy”. In 2015, artist and writer J.R. Carpenter evoked “the term ‘handmade web’ to suggest [among other things] slowness and smallness as a forms of resistance.”41

But what exactly are we nostalgic about? Netstalgia has less to do with a precise aesthetic, or with the age of innocence it supposedly symbolizes (the internet was never innocent), than with a time, idealized by memory, in which the convenience trade-off wasn’t so stringent: a time in which the “burden of system design and specification” didn’t feel so heavy, because there was nothing faster to compare it to, and because the speedrun wasn’t the default mode of computing. All these aspects added up to what feels like a truly personal experience.

The risk with netstalgia is that it fosters a misunderstanding, namely, that in order to escape speedrun mode and misconvenience one has to revert to hand-written HTML, with no automation whatsoever and no programming at all. As a consequence of this misunderstanding, “friction” is glorified: things that don’t work or take too much time are considered good, because they are meant to trigger an epiphany in which smooth interfaces are finally demystified.

Don’t get me wrong: I like HTML. I can appreciate a simple, hand-written website. I understand the allure of poor media.42 But I can also enjoy watching the computer make the same mistake ten thousand times after I programmatically asked it to (check the console 🤓). Also, a degree of automation is generally embedded in the tools we might use to craft a handmade website. Or are we going to reject syntax autocomplete for the sake of true DIY?

I think we shouldn’t eulogize friction for friction’s sake, because friction, in itself, is just user frustration. We have to be able to recognize elegance and generate autonomous convenience for ourselves. We shouldn’t deny the computer the possibility of making decisions for us; we just have to be aware of how such decision-making takes place. Programmability is still at the core of computers, and that is where we can find full read-write computer literacy.

In a way, netstalgia already hints at this. Etymologically, nostalgia refers to the pain of not being able to come back home. The concept of home is crucial. We conveniently arrange the things in our home so that we can create our routines (some would say program our behavior). Those routines give stability and durability to our everyday life. Remember the toaster? Netstalgia might then be nostalgia for non-predetermined computer behavior, for the computer as a home that is not fully furnished in advance.

Agency Not Apps

Founded by programmer Bret Victor, Dynamicland is a “non-profit long-term research group in the spirit of Doug Engelbart and Xerox PARC.” Its goal is to invent a new computational medium in which the user interacts not only with symbols on screens but with material objects in physical space. Earlier, I stated that a serious discussion of computer literacy can’t ignore the issue of time. Well, Dynamicland’s time frame is 50 years. What follows, then, will come as no surprise: Alan Kay was involved in the project in some way.

The new computational medium envisioned by Dynamicland is not a device but a place, an environment, both physical and virtual. It looks like a beautiful, communal mess. Within it, users (kids, adults, the elderly) interact with paper, toys, pens and, of course, with each other, because the folks at Dynamicland value togetherness and participation. Borrowing from gaming, they say it’s “as multiplayer as the real world.”

How can participation be encouraged? Dynamicland’s computational media are not intimidating and don’t look crystallized once and for all; they “feel like stuff anyone can make”. This is quite the opposite of today’s impersonal computing, where interfaces, even though they constantly change, appear set in stone. Dynamicland puts it very clearly: “no normal person sees an app and thinks ‘I can make that myself.’” They want agency, not apps.

Not unlike Dynamicland’s media, netstalgic homepages, with their “under construction” GIFs, often look changeable, not solidified in an organic cycle of invisible updates.43 They look like a thing that’s built, and not just built, but built by someone. With these websites we inherit a world that’s incomplete and therefore open to new beginnings. Whereas we might feel stuck in a behavioral present of impersonal computing performed in speedrun mode, we can find agency both in the netstalgic past and in the future of communal computation.

Acknowledgments

I’d like to thank Vanessa Bartlett, Marloes de Valk, Olia Lialina, Geert Lovink, Gui Machiavelli, Sebastian Schmieg and Thomas Walskaar for helping me out in different ways with this essay. The research process found its home on the Lurk instance of Mastodon (couldn’t have asked for anything better!), so I’d like to thank all Lurk users (as well as the ones from neighboring instances) for their patience while I was broadcasting my half-baked thoughts. Some of these users enthusiastically responded to my toots and thought along with me. This energized me and made my writing sharper. This is why I’m thankful to Brendan Howell, Aymeric Mansoux, Jine, David Benqué, Roel Roscam Abbing, JauntyWunderKind, Adrian Cochrane, Benjamin, Fionnáin, Luka, frankiezafe, paolog, Nolwenn and many many others.

This project also inhabited another home: the 2020 KABK research group, generously orchestrated by Alice Twemlow. My gratitude goes to her and to my fellow researchers: Hannes Bernard, Katrin Korfmann, Vibeke Mascini and Dirk-Jan Visser.

Related Texts

As I was saying, I spent quite some read/write time on Mastodon while working on this. Since the beginning, I collected all the ideas and questions related to The User Condition under a mastodontic thread. This has been my mode of thinking out loud, so please don’t judge :)

The structured pieces of writing mostly ended up on my Entreprecariat blog. Here’s a list of the posts:

- How to Name Our Computer Monoculture?, May 13, 2020

- On Movement and Relocation, May 11, 2020

- A Mobile First World, April 28, 2020

- User Proletarianization, a Table, April 13, 2020

- A Tentative Chronology of the Industrialization of Web Interfaces, March 11, 2020

- Infinite Scroll and the Proletarization of Interaction, February 20, 2020

Oh, and one of them (my favorite) was also published on npc.cafe, a text repository about videogames.

Repository

Soon, maybe, I will make the code publicly available, so take this as a note to my future self.

Arendt, Hannah. 2018 [1958]. The Human Condition. Chicago: University of Chicago Press. Arendt, Hannah. 2016 [1963]. On Revolution. London: Faber & Faber.↩

“One can easily look at the development of the WWW—with its lightning-fast means of communication, its rapid commercialization in the mid-1990s and its accessibility to a huge audience—in relation to this notion of the metropolis. […] In an environment like this, the number of possible contacts for each person has grown far beyond what could be expected in a large city. Yet just like in a large city, the Internet’s anonymous structures have resulted in impersonal modes of communication.” Knopf, Dennis. 2009. “Defriending the Web.” In Digital Folklore, edited by Dragan Espenschied and Olia Lialina. Stuttgart: Merz&Solitude.↩

McNeil, Joanne. 2020. Lurking: How a Person Became a User. New York: MCD.↩

“Agency.” Merriam-Webster.com Dictionary.↩

Bridle, James. 2019. New Dark Age: Technology and the End of the Future. London; Brooklyn, NY: Verso.↩

The notion of user is clearly a reductive one, but not so much because it sees the agent only in functional terms (e.g. usability). The real reduction has to do with positioning the agent only within the computer system, while they inhabit several systems at once. Nobody would be surprised by a computer user who cries on Skype, laughs while reading a silly wifi name, or gets mad at the blue screen of death. In this sense, the Augustinian fruor (enjoyment) happens within utor (use). Thanks to Salvatore Iaconesi who encouraged me to think along these lines.↩

Manovich, Lev. “How Do You Call a Person Who Is Interacting with Digital Media?” Software Studies Initiative (blog), July 19, 2011.↩

Kay, Alan, and Adele Goldberg. “Personal Dynamic Media.” Computer, 1977.↩

Galloway, Alexander R. The Interface Effect. Cambridge, UK; Malden, MA: Polity, 2012.↩

Lialina, Olia. “Turing Complete User,” 2012.↩

Bratton, Benjamin H. The Stack: On Software and Sovereignty. 1st edition. Cambridge, Massachusetts: The MIT Press, 2016.↩

Private conversations via mail, phone, XMPP chat, telepathy.↩

Satchell, Christine, and Paul Dourish. “Beyond the User: Use and Non-Use in HCI.” In Proceedings of the 21st Annual Conference of the Australian Computer-Human Interaction Special Interest Group: Design: Open 24/7, 9–16. OZCHI ’09. New York, NY, USA: Association for Computing Machinery, 2009.↩

“But creative thought and essentially repetitive thought are very different things. For the latter there are, and may be, powerful mechanical aids”. Bush, Vannevar. “As We May Think.” The Atlantic, July 1, 1945.↩

Illich, Ivan. Tools for Conviviality. London: Marion Boyars, 2001.↩

Licklider, J. C. R. “Man-Computer Symbiosis.” IRE Transactions on Human Factors in Electronics HFE-1, no. 1 (March 1960): 4–11.↩

Kay, Alan. “User Interface: A Personal View.” In The Art of Human-Computer Interface Design, edited by Brenda Laurel, 191. Reading: Addison-Wesley, 1989.↩

Carter, Shan, and Michael Nielsen. “Using Artificial Intelligence to Augment Human Intelligence.” Distill 2, no. 12 (December 4, 2017): e9.↩

Wiener, Norbert. The Human Use Of Human Beings: Cybernetics And Society. New edition. New York, N.Y: Da Capo Press, 1988.↩

Merchant, Brian. “The Father Of Mobile Computing Is Not Impressed.” Fast Company, September 15, 2017.↩

Espenschied, Dragan. “Where Did The Computer Go.” In Digital Folklore. Lialina, Olia and Espenschied, Dragan (eds.). Stuttgart: Merz&Solitude, 2009. Espenschied is also the one who developed the footnote display system used in this webpage, which is the best solution I could find for showing footnotes on both big and small screens.↩

The discreteness of keyboards resonates with decision-making and therefore action. “I choose a key, I decide on a key. I decide on a particular letter of the alphabet in the case of a typewriter, on a particular note in the case of a piano, on a particular channel in the case of a television set, or on a particular telephone number. The President decides on a war, the photographer on a picture. Fingertips are organs of choice, of decision.” Flusser, Vilém. 2017. “The Non-Thing 2.” In The Shape of Things: A Philosophy of Design. London: Reaktion Books.↩

Saylor, Michael J. 2013. The Mobile Wave: How Mobile Intelligence Will Change Everything. Hachette UK.↩

Handley, Lucy. 2019. “Nearly Three Quarters of the World Will Use Just Their Smartphones to Access the Internet by 2025.” CNBC, January 24, 2019.↩

“Demographics of Mobile Device Ownership and Adoption in the United States.” n.d. Pew Research Center (blog). Accessed January 19, 2021.↩

Or “mainstream computing”, “computer convenientism”, “the valley of wretched conformity”, “Californian cloud consensus”… These are some of the terms people came up with when I tried to explain my trouble defining the contemporary computer culture, which can be understood as monoculture despite its apparent diversity and plurality. The full list is here.↩

It’s worth pointing out that coders themselves help maintain this class division. “I’ve noticed that when software lets nonprogrammers do programmer things, it makes the programmers nervous. Suddenly they stop smiling indulgently and start talking about what ‘real programming’ is. This has been the history of the World Wide Web, for example. Go ahead and tweet ‘HTML is real programming,’ and watch programmers show up in your mentions to go, ‘As if.’ Except when you write a web page in HTML, you are creating a data model that will be interpreted by the browser. This is what programming is.” Ford, Paul. 2020. “‘Real’ Programming Is an Elitist Myth.” Wired, August 18, 2020.↩

Kay, Alan, and Adele Goldberg, op. cit.↩

Graham, Paul. 2001. “The Other Road Ahead” (blog). September 2001.↩

Arcangel, Cory. 2009. “Everybody Else.” In Digital Folklore, edited by Olia Lialina and Dragan Espenschied. Stuttgart: Merz&Solitude.↩

Arcieri’s account was then suspended. Twitter’s reply to him: “We tested for bias before shipping the model & didn’t find evidence of racial or gender bias in our testing. But it’s clear that we’ve got more analysis to do. We’ll continue to share what we learn, what actions we take, & will open source it so others can review and replicate.” Another user’s suggestion: “Here’s an easier way: Take the middle of the picture. That’s it. No bias.”↩

Given the history of racist bias in optical devices, it is legitimate to suspect that there is a racist component here as well. See Bridle, James. 2019. New Dark Age: Technology and the End of the Future. London; Brooklyn, NY: Verso. Pp. 143-144.↩

I’d like to thank Joseph Knierzinger for pointing me to this case study.↩

Stiegler, Bernard. 2016. Automatic Society. Vol. 1: The Future of Work. Cambridge: Polity Press.↩

Zuboff, Shoshana. 2020. The Age of Surveillance Capitalism: The Fight for a Human Future at the New Frontier of Power. New York: PublicAffairs.↩

Ventura, Raffaele Alberto. Radical choc: ascesa e caduta dei competenti. Torino: Einaudi, 2020. Translation mine.↩

For instance, according to Max Weber there is no full understanding of capitalism without the inclusion of economic rationalization. Cf. Weber, Max. The Protestant Ethic and the Spirit of Capitalism. Wilder Publications, 2018. Preliminary note.↩

McNeil, Joanne. op. cit.↩

Pascal Deville, who founded Brutalist Websites, speaks of brutalism as “a reaction by a younger generation to the lightness, optimism, and frivolity of today’s web design.” Besides the pervasiveness of cute corporate illustrations, I struggle to see much lightness and frivolity in today’s web design. And to a certain extent, I notice more optimism in what Deville would call brutalist websites than elsewhere. The websites collected by Deville are too diverse to derive from them an understanding of what brutalist means. It might simply be that the label is stronger than the content.↩

Carpenter, J. R. 2015. “A Handmade Web.” March 2015.↩

Lorusso, Silvio. 2015. “In Defense of Poor Media.” Post-Digital Publishing Archive. May 27, 2015.↩

“Neither the ‘Under Construction’ sign nor the idea of permanent construction made it into the professional web. The idea of unfinished business contradicts the traditional concept of professional designer-client relations: fixed terms and finished products.” Lialina, Olia. 2005. “A Vernacular Web. Under Construction.” art.teleportacia.org, 2005-2010.↩